
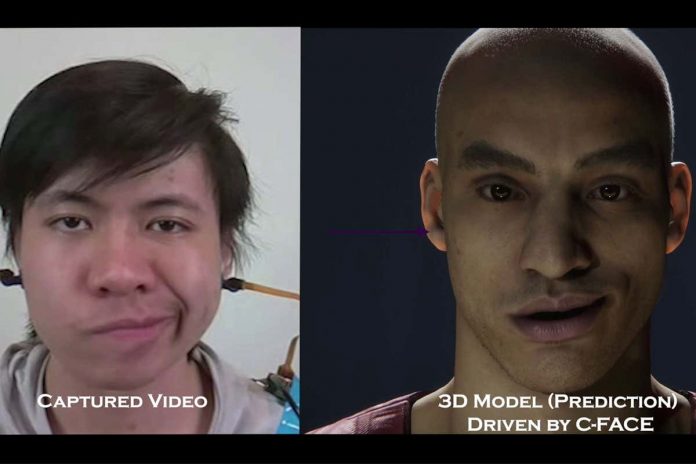

A wearable device consisting of two mini-cameras mounted on earphones can recognise your facial expressions and read your lips, even if your mouth is covered.

The tool – called C-Face – was developed by Cheng Zhang at Cornell University in Ithaca, New York, and his colleagues. It looks at the sides of the wearer’s head and uses machine learning to accurately visualise facial expressions by analysing small changes in cheek contour lines.

“With previous technology to reconstruct facial expression, you had to put a camera in front of you. But that brings a lot of limitations,” says Zhang. “Right now, many people are wearing a face mask, and standard facial tracking will not work. Our technology still works because it doesn’t rely on what your face looks like.”

The researchers think their device could be used to project facial expressions in virtual reality, as well as to track emotions and assist with digital lip-reading for people with hearing impairments.


Zhang and his colleagues tested C-Face on nine volunteers. When compared with a library of images taken with a front-positioned camera, the device accurately predicted facial arrangements for different expressions (measured by changes at 42 points on the face) to within 1 millimetre. The researchers will present their work at the virtual ACM Symposium on User Interface Software and Technology next week.
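
To make the accuracy figure concrete, here is a minimal sketch of one way an error across 42 facial points could be computed. The article does not say how the researchers calculated their result, so the averaging method, the function name `mean_landmark_error` and the sample coordinates are all assumptions made for illustration, not the team's method or data.

```python
import math


def mean_landmark_error(predicted, reference):
    """Mean Euclidean distance between paired landmarks, in the input units."""
    if len(predicted) != len(reference):
        raise ValueError("landmark lists must have the same length")
    if not predicted:
        raise ValueError("at least one landmark is required")
    distances = [math.dist(p, r) for p, r in zip(predicted, reference)]
    return sum(distances) / len(distances)


# Hypothetical data, not from the study: 42 reference points in millimetres,
# with every predicted point shifted 0.5 mm along the x axis.
reference = [(float(i), float(2 * i)) for i in range(42)]
predicted = [(x + 0.5, y) for x, y in reference]

error_mm = mean_landmark_error(predicted, reference)
print(f"mean error: {error_mm:.2f} mm")
```

In this made-up case every point is off by the same amount, so the mean error equals that offset and falls under the 1 millimetre mark the article reports.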

C-Face is still at an early stage and there are technical problems, such as battery life, that need to be overcome before it can be rolled out more widely. Zhang also acknowledges that ensuring user privacy could be a problem. “There’s so much private information we can get from this device,” he says. “I think that’s definitely one issue we need to address.”
